Next 2-3 Years AI Unleashing 10X Productivity on Business and the World

Written by admin on October 26, 2025

An AI expert from Anthropic predicts a mostly smooth transition for AI over the next 2-3 years, in which businesses adopt best-practice AI that unleashes 10X productivity. This would create great value and pay off the trillions we have invested and will invest.

Perfecting and scaling what AI can do now, and improving it with better software architectures that ensure quality and verify results, looks useful, valuable and not scary.

This suggests we can avoid the two big feared problems: getting too little AI or too much AI.

The too-little-AI problem is a crash where the world cannot get a return on trillions in investment.

The too-much-AI problem is where AI is so good and so powerful that humans become useless and lose control.

A world three to five years from now that has absorbed and disseminated 10X productivity, with AI still mainly helping top human performers deliver big results, would be ready for the next step up to 10-100X productivity.

Human civilization would be safely surfing an AI technology wave that continues the industrial and information revolutions, during which GDP increased 100 times. We would be in the Goldilocks zone. Not too much, not too little. Just right.

2026–2027 Predictions: Full-Day Agents, Expert Parity, and GDPval

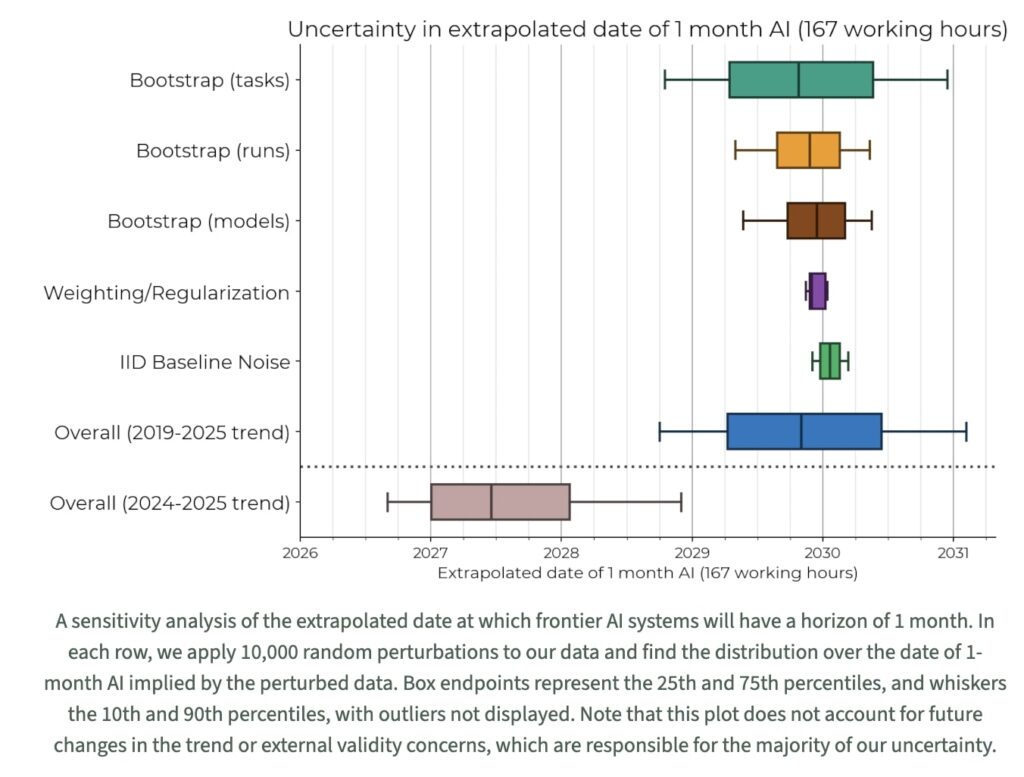

Naive extrapolations (METR, others):

Mid-2026: Models work autonomously for a full day (e.g., implementing app features or writing research reports). This is key for delegation: hour-long rather than 10-minute runs make it practical to manage “teams” of agents.

Late 2026: One model matches experts across occupations (per OpenAI’s GDPval, which benchmarks real-world tasks from domain professionals against human performance).

2027: Models frequently outperform experts on many tasks.
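
The naive extrapolation above amounts to a simple exponential projection, which can be sketched in a few lines. This is a minimal illustration and not METR's actual method. The starting horizon, the doubling time, and the choice of eight hours as a "full day" are hypothetical inputs chosen for illustration, not figures from the talk.

```python
import math


def months_until_horizon(current_hours: float, target_hours: float,
                         doubling_months: float) -> float:
    """Months until the task horizon reaches target_hours.

    Assumes (hypothetically) that the length of task a model can complete
    autonomously doubles every doubling_months.
    """
    if current_hours <= 0 or target_hours <= 0 or doubling_months <= 0:
        raise ValueError("all arguments must be positive")
    if target_hours <= current_hours:
        return 0.0  # target already reached
    doublings_needed = math.log2(target_hours / current_hours)
    return doublings_needed * doubling_months


# Illustrative inputs only: a 2-hour horizon today, an 8-hour "full day"
# target, and a 6-month doubling time. Two doublings are needed.
print(months_until_horizon(current_hours=2.0, target_hours=8.0,
                           doubling_months=6.0))  # 12.0
```

The projection is only as good as the assumed doubling time, which is why the talk labels this kind of forecast a naive extrapolation.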

Move 37: AI Creativity and Novelty

The iconic 2016 AlphaGo moment (Game 2 vs. Lee Sedol): an unexpected, “creative” move stunned professionals (commentator: “Truly novel”). This was not rote imitation. AI samples from probability distributions, which allows effectively unlimited novelty. Modern LLMs already generate diverse outputs (e.g., previously unseen code and papers), but a “Move 37” moment needs three things: hard tasks, diverse ideas, and accurate evaluation (it is easy to create junk and hard to ensure utility). Pre-training helps by learning those distributions; sampling unlocks non-human paths.

Novel Science: From AlphaCode/AlphaTensor to Nobel-Worthy Breakthroughs

AlphaCode (novel programs), AlphaTensor (novel algorithms), and recent DeepMind/Yale biomedical discoveries show that AI can invent. The trajectory is one of increasingly impressive results: 2026 will likely see “super impressive” novelties that command consensus, and debates will fade as clarity grows. Nobel timeline: the capability for solo breakthroughs rivaling AlphaFold, which won in 2024, arrives around 2027; the awards lag (2028+). The excitement: unlocking the mysteries of the universe and raising living standards (e.g., halting aging, climate fixes).

Discontinuity vs. Smooth Progress: No Sudden Takeoff

The AI 2027 singularity scenario (AI self-improving via novel science) is unlikely. Expect smooth productivity ramps instead; researchers already use AI. The key question: do AI productivity gains offset rising difficulty? The easy problems get solved first (compare pharma: billion-dollar drugs today vs. accidentally discovered antibiotics). The normal pattern is exponential research effort for linear gains, so acceleration is unlikely (unlike fields needing more humans).

If a takeoff were under way, we would see weekly signals (10x jumps), and could pause if the picture were unclear. The optimistic case: balanced trends sustain linear progress.
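
The "exponential effort for linear gains" pattern can be made concrete with a toy model. This is a sketch under a stated assumption, not a claim about real research economics: each successive step of progress is assumed to cost a fixed multiple of the previous one. The function name and the parameters `cost_growth` and `first_cost` are hypothetical.

```python
def steps_affordable(total_effort: float, cost_growth: float = 2.0,
                     first_cost: float = 1.0) -> int:
    """Count progress steps that fit in total_effort.

    Toy assumption: step k costs first_cost * cost_growth**k, so each new
    step is cost_growth times harder than the one before it.
    """
    if cost_growth <= 1.0 or first_cost <= 0:
        raise ValueError("cost_growth must exceed 1 and first_cost must be positive")
    steps = 0
    cost = first_cost
    remaining = total_effort
    while remaining >= cost:
        remaining -= cost      # pay for this step
        cost *= cost_growth    # the next step is harder
        steps += 1
    return steps


# Each row multiplies the effort budget by 10.
for effort in (10, 100, 1000, 10000):
    print(effort, steps_affordable(effort))
# 10 3
# 100 6
# 1000 9
# 10000 13
```

In this toy model, each 10x increase in effort buys only about three more steps (log2 of 10 is roughly 3.3). A 10x productivity boost from AI would therefore shift progress forward by a fixed amount rather than trigger a runaway, which is the intuition behind the smooth-progress argument.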

Jobs: Complementarity, Not Replacement

AI ≠ human intelligence: it is superhuman at calculation and subpar at other things. Expect gradual synergy through comparative advantage: AI offloads drudgery (Schrittwieser: Claude refactors UI) while humans excel at integration and creativity. Chess/Go: Enhanced (AI tutors/streams; easier solo practice).

Coding/study: Raises idea-to-output bar.

Inequality? A hard question. AI lifts the floor (anyone can build) but amplifies top performers. Outcomes will differ by country depending on taxes and redistribution.

Nonzero-sum: Grow the pie (as in the agricultural and industrial revolutions). 10x productivity → abundance (medicine, energy, materials).

Political fix: Democratic wealth-sharing. Technology alone cannot do it; 40-hour weeks persist despite past productivity gains.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.